File: Machine Learning Homework 4

Neural Networks & Deep Learning

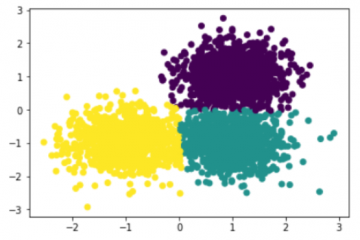

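In this section we build a three-layer neural network for handwritten digit recognition, then use the AdaGrad, RMSprop, Momentum, Nesterov Momentum, and Adam optimization algorithms to speed up gradient descent. We start by implementing a simple neural network.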

1. Import the required Python libraries

In [4]:

from google.colab import drive

drive.mount('/content/drive')

Drive already mounted at /content/drive; to attempt to forcibly remount, call drive.mount("/content/drive", force_remount=True).

In [5]:

import sys

from google.colab import drive

drive.mount('/content/drive')

sys.path.append('/content/drive/MyDrive/ML_HW/HW4_neural_network')

Drive already mounted at /content/drive; to attempt to forcibly remount, call drive.mount("/content/drive", force_remount=True).

In [6]:

# coding: utf-8

import numpy as np

import matplotlib.pyplot as plt

from utils import load_mnist

In [7]:

from collections import OrderedDict

2. Load and visualize the data

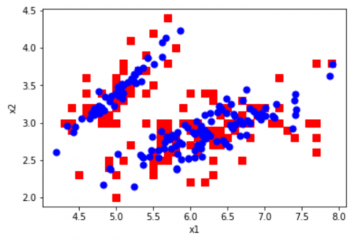

This experiment uses the MNIST database, a dataset of handwritten digits. Each digit is a 28×28 grayscale image labeled with a number from 0 to 9. MNIST provides 60,000 images for training and 10,000 images for testing.

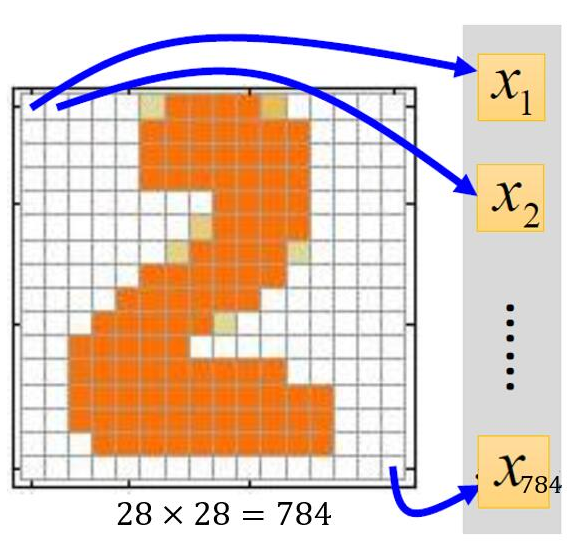

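A grayscale image is normally two-dimensional, but a fully connected layer takes a one-dimensional vector as input. We therefore have to "flatten" each two-dimensional grayscale image into a one-dimensional vector, as the figure illustrates.

Each sample is therefore a 784-dimensional vector (28 × 28 = 784).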

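For multi-class classification we can use softmax. Handwritten digit recognition is such a task, with 10 classes denoted by the digits 0-9. Softmax represents each class as a vector, so each class label has to be converted to a vector representation, the one-hot vector. For example, the one-hot vectors for the digits 0 and 1 are:

$$[1, 0, 0, 0, 0, 0, 0, 0, 0, 0] \quad \text{and} \quad [0, 1, 0, 0, 0, 0, 0, 0, 0, 0]$$

The labels of the training samples have to be converted to one-hot vectors.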
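A minimal sketch of this label conversion, assuming integer labels stored in a NumPy array. The helper name `to_one_hot` is hypothetical and is not part of `utils.load_mnist`, which performs the conversion itself when `one_hot_label=True`:

```python
import numpy as np

def to_one_hot(labels, num_classes=10):
    """Convert integer labels of shape (N,) to one-hot rows of shape (N, num_classes)."""
    labels = np.asarray(labels, dtype=int)
    # Row k of the identity matrix is the one-hot vector for class k.
    return np.eye(num_classes)[labels]

labels = np.array([0, 4, 1, 9])
one_hot = to_one_hot(labels)
print(one_hot.shape)                 # (4, 10)
print(np.argmax(one_hot, axis=1))    # recovers the integer labels
```

`np.argmax` along axis 1 inverts the encoding, which is how the one-hot labels are turned back into digits later in this notebook.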

In [8]:

# Directory that contains the dataset files

path = '/content/drive/MyDrive/ML_HW/HW4_neural_network/MNIST Data'

# Load the training and test sets

# normalize=True scales the pixel values to [0, 1]

# one_hot_label=True converts the labels to one-hot vectors

(x_train, y_train), (x_test, y_test) = load_mnist(path, normalize=True, one_hot_label=True)

In [9]:

print('The shape of X_train is:',x_train.shape)

print('The shape of Y_train is:',y_train.shape)

print('The shape of X_test is:',x_test.shape)

print('The shape of Y_test is:',y_test.shape)

The shape of X_train is: (60000, 784)

The shape of Y_train is: (60000, 10)

The shape of X_test is: (10000, 784)

The shape of Y_test is: (10000, 10)

Let's look at a few images from the training set.

In [10]:

fig = plt.figure()

ax1 = fig.add_subplot(141)

ax1.imshow(x_train[1,:].reshape(28, 28), cmap='Greys')

ax2 = fig.add_subplot(142)

ax2.imshow(x_train[2,:].reshape(28,28), cmap='Greys')

ax3 = fig.add_subplot(143)

ax3.imshow(x_train[3,:].reshape(28,28), cmap='Greys')

ax4 = fig.add_subplot(144)

ax4.imshow(x_train[4,:].reshape(28,28), cmap='Greys')

plt.show()

print('one-hot labels:',y_train[1,:],y_train[2,:],y_train[3,:],y_train[4,:])

print('actual labels:',np.argmax(y_train[1,:]),np.argmax(y_train[2,:]),np.argmax(y_train[3,:]),np.argmax(y_train[4,:]))

one-hot labels: [1. 0. 0. 0. 0. 0. 0. 0. 0. 0.] [0. 0. 0. 0. 1. 0. 0. 0. 0. 0.] [0. 1. 0. 0. 0. 0. 0. 0. 0. 0.] [0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]

actual labels: 0 4 1 9

3. Fully connected neural network

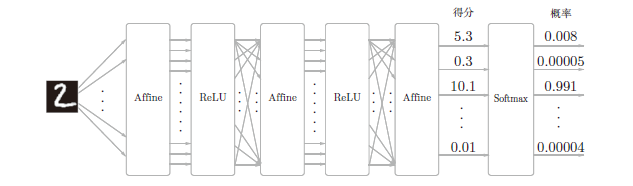

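Next we build a three-layer fully connected neural network. The figure shows the network structure.

Notation used in the formulas:

The input layer is conventionally not counted, so the network in the figure has only 3 layers. A bracketed superscript denotes the layer: the weights of layer 1 are $W^{[1]}$, the bias of layer 1 is $b^{[1]}$ (not drawn in the figure), and the activations of layer 1 are $a^{[1]}$.

The first two layers use ReLU as the activation function, and the last layer uses softmax for classification.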

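3.1 Initialize the network parameters

We set 300 neurons in layer 1, 300 in layer 2, and 10 in the last layer. The input vector has 784 dimensions, which fixes the shape of every parameter in the network:

$W^{[1]}$ has shape $(784, 300)$, $b^{[1]}$ has shape $(300,)$

$W^{[2]}$ has shape $(300, 300)$, $b^{[2]}$ has shape $(300,)$

$W^{[3]}$ has shape $(300, 10)$, $b^{[3]}$ has shape $(10,)$

The weights $W$ are initialized from a standard normal distribution scaled by the factor 0.01, and all biases $b$ are initialized to 0.

Hint: use np.random.randn() and np.zeros()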

In [11]:

def initialize_parameters(input_size, hidden_size, output_size, weight_init_std):

    """

    @param input_size: dimension of the input vector

    @param hidden_size: number of neurons in each hidden layer

    @param output_size: number of neurons in the output layer

    @param weight_init_std: scale factor for the random weights

    """

    np.random.seed(1)

    params = {}

    ### START CODE HERE ###

    params['W1'] = weight_init_std*np.random.randn(input_size,hidden_size)

    params['b1'] = np.zeros(hidden_size)

    params['W2'] = weight_init_std*np.random.randn(hidden_size,hidden_size)

    params['b2'] = np.zeros(hidden_size)

    params['W3'] = weight_init_std*np.random.randn(hidden_size,output_size)

    params['b3'] = np.zeros(output_size)

    ### END CODE HERE ###

    return params
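To see how these parameter shapes fit together, the sketch below pushes a random batch through three affine maps with the same layer sizes. It is an illustration only: the batch size of 5 and the local variable names are assumptions, and the activation functions are omitted because they do not change shapes.

```python
import numpy as np

rng = np.random.default_rng(0)
sizes = [784, 300, 300, 10]                    # input, layer 1, layer 2, output
batch = rng.standard_normal((5, sizes[0]))     # 5 samples (assumed batch size)

shapes = []
a = batch
for n_in, n_out in zip(sizes[:-1], sizes[1:]):
    W = 0.01 * rng.standard_normal((n_in, n_out))
    b = np.zeros(n_out)
    a = a @ W + b                              # b broadcasts over the batch axis
    shapes.append(a.shape)

print(shapes)  # [(5, 300), (5, 300), (5, 10)]
```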

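3.2 Define each layer of the network

We implement each layer of the network as a separate class.

We start with the ReLU layer.

ReLU layer

The ReLU activation function is:

$$\mathrm{ReLU}(x) = \max(0, x)$$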

In [12]:

class Relu:

    def __init__(self):

        self.mask = None

    def forward(self, x):

        self.mask = (x <= 0)

        out = x.copy()

        ### START CODE HERE ###

        out[self.mask] = 0

        ### END CODE HERE ###

        return out

    def backward(self, dout):

        ### START CODE HERE ###

        dout[self.mask] = 0

        ### END CODE HERE ###

        dx = dout

        return dx

A quick test:

In [13]:

relu = Relu()

x = np.array( [[1.0, -0.5], [-2.0, 3.0]] )

relu.forward(x), relu.backward(x)

Out[13]:

(array([[1., 0.],

[0., 3.]]), array([[1., 0.],

[0., 3.]]))
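Note that the `backward` method above writes into `dout` itself, so the test call also zeroes entries of `x`. The sketch below shows one way to leave the argument untouched; the function names `relu_forward` and `relu_backward` are hypothetical and are not part of the assignment code.

```python
import numpy as np

def relu_forward(x):
    """Return ReLU(x) and the mask of non-positive inputs."""
    mask = x <= 0
    return np.where(mask, 0.0, x), mask

def relu_backward(dout, mask):
    """Pass gradients through where the input was positive, without modifying dout."""
    return np.where(mask, 0.0, dout)

x = np.array([[1.0, -0.5], [-2.0, 3.0]])
out, mask = relu_forward(x)
dout = np.ones_like(x)
dx = relu_backward(dout, mask)
print(out)   # non-positive inputs are clipped to 0
print(dx)    # gradient is 1 where x > 0 and 0 elsewhere
```

Inside the training loop of this notebook the in-place version is harmless, because each upstream gradient is used only once.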

Affine layer

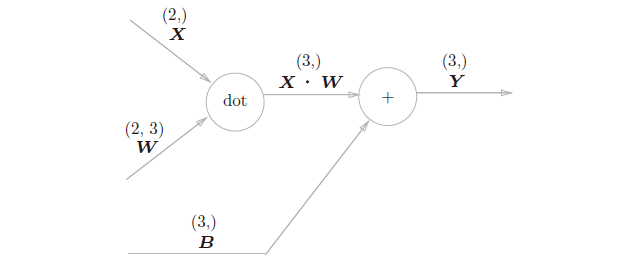

神经网络的正向传播中,为了计算加权信号的总和,使用了矩阵的乘积运算,回顾一下公式:即

Y = np.dot(X, W) + B

假设X,W,B的形状分别为(2,)、(2,3)、(3,)

现在将这里进行的求矩阵的乘积与偏置的和的运算用计算图表示出来。将乘积运算用“dot”节点表示的话,则np.dot(X, W) + B的运算如图所示:

以矩阵为对象的反向传播,按矩阵的各个元素进行计算时,步骤和以标量为对象的计算图相同。实际写 一下的话,可以推导得到:

根据这个式子我们可以写出计算图的反向传播:

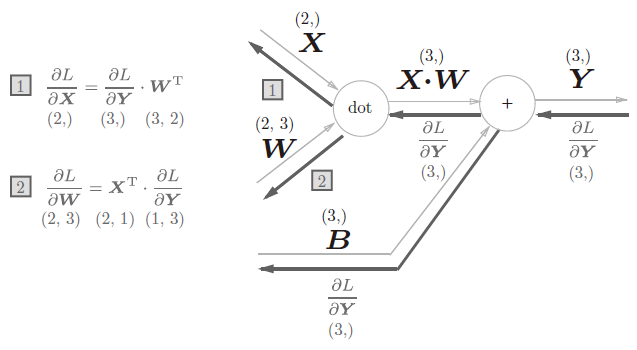

前面介绍的Affine层的输入是以单个数据为对象的,现在我们考虑N个数据一起进行正向传播,计算图如下:

与刚刚不同的是,现在输入X的形状是(N, 2)。之后就和前面一样,在计算图上进行单纯的矩阵计算。反向传播时,如果注意矩阵的形状,就可以和前面一样推导出和

。

In [14]:

class Affine:

    def __init__(self, W, b):

        self.W = W

        self.b = b

        self.x = None

        self.original_x_shape = None

        # Gradients of the weights and the bias

        self.dW = None

        self.db = None

    def forward(self, x):

        self.original_x_shape = x.shape

        x = x.reshape(x.shape[0], -1)

        self.x = x

        ### START CODE HERE ###

        out = np.dot(self.x,self.W)+self.b

        ### END CODE HERE ###

        return out

    def backward(self, dout):

        dx = np.dot(dout, self.W.T)

        ### START CODE HERE ###

        self.dW = np.dot(self.x.T,dout)

        self.db = np.sum(dout,axis=0)

        ### END CODE HERE ###

        dx = dx.reshape(*self.original_x_shape) # restore the input's original shape (for tensor inputs)

        return dx
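A finite-difference check is a common way to gain confidence in backward formulas like these. The sketch below is a standalone illustration, not part of the assignment: it uses the toy loss L = sum(Y), so the upstream gradient is a matrix of ones, and the shapes and variable names are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.standard_normal((4, 2))      # N = 4 samples
W = rng.standard_normal((2, 3))
B = rng.standard_normal(3)

def loss(W_, B_):
    # Scalar loss L = sum(Y), so dL/dY is a matrix of ones.
    return np.sum(X @ W_ + B_)

dout = np.ones((4, 3))
dW = X.T @ dout                      # analytic gradient w.r.t. W
dB = dout.sum(axis=0)                # analytic gradient w.r.t. B

# Central-difference estimates for one entry of W and one entry of B.
h = 1e-5
Wp, Wm = W.copy(), W.copy()
Wp[0, 1] += h
Wm[0, 1] -= h
num_dW01 = (loss(Wp, B) - loss(Wm, B)) / (2 * h)
Bp, Bm = B.copy(), B.copy()
Bp[2] += h
Bm[2] -= h
num_dB2 = (loss(W, Bp) - loss(W, Bm)) / (2 * h)

print(np.isclose(dW[0, 1], num_dW01), np.isclose(dB[2], num_dB2))
```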

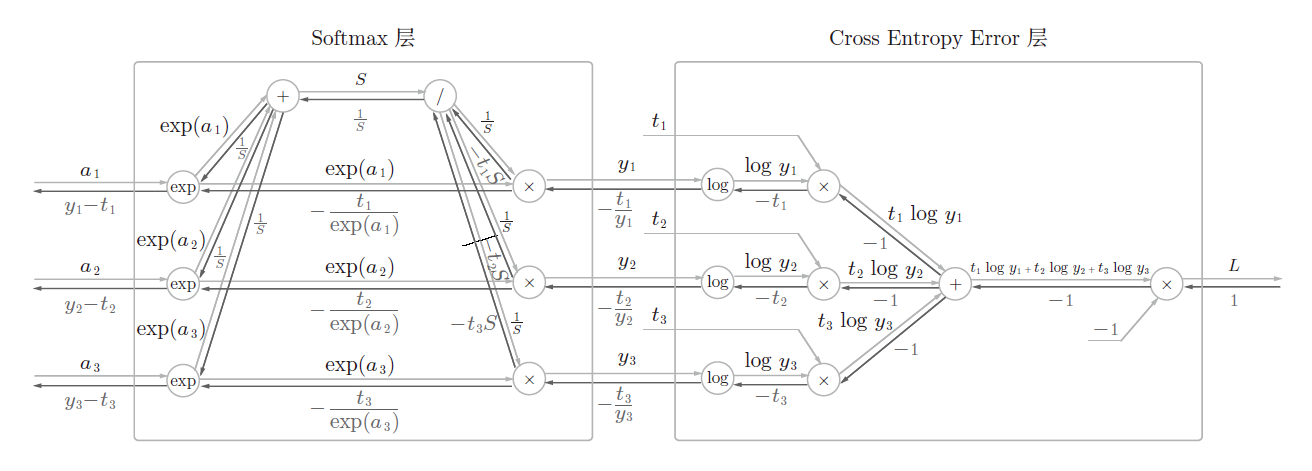

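Softmax-with-Loss layer

The softmax function normalizes its input values and outputs them, as shown in the figure:

The formula is

$$\mathrm{softmax}(x)_i = \frac{e^{x_i}}{\sum_j e^{x_j}}$$

where $\mathrm{softmax}(x)_i$ is the $i$-th component of the vector $\mathrm{softmax}(x)$.

The function is given directly here.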

In [15]:

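def softmax(x):

    # Transpose so that each column holds one sample

    x = x.T

    # Subtract the per-sample maximum to avoid overflow in np.exp

    x = x - np.max(x, axis=0)

    y = np.exp(x) / np.sum(np.exp(x), axis=0)

    return y.T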
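The subtraction of the maximum in `softmax` matters for numerical stability. The following sketch compares a naive version with a shifted version on large inputs; both helper functions are written here for illustration only and assume a 2-D input with one sample per row.

```python
import numpy as np

def softmax_naive(x):
    e = np.exp(x)                    # overflows for large x
    return e / e.sum(axis=1, keepdims=True)

def softmax_stable(x):
    # Subtracting the row maximum leaves the result unchanged but keeps exp() finite.
    shifted = x - x.max(axis=1, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=1, keepdims=True)

x = np.array([[1000.0, 1001.0, 1002.0]])
with np.errstate(over='ignore', invalid='ignore'):
    naive = softmax_naive(x)
stable = softmax_stable(x)
print(naive)    # contains nan because exp(1000) overflows to inf
print(stable)   # finite probabilities that sum to 1
```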

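With softmax, the loss function we usually use is cross-entropy:

$$L(\hat{y}, y) = -\sum_{j=1}^{C} y_j \log \hat{y}_j$$

where $C$ is the number of classes, 10 in this experiment.

The cost function is:

$$J = \frac{1}{m} \sum_{i=1}^{m} L(\hat{y}^{(i)}, y^{(i)})$$

Here $m$ is the mini-batch size. The training set has 60,000 samples, so we do not feed the full $60000 \times 784$ input through the network at once. Instead, each iteration selects a subset for forward propagation, so the input has size $m \times 784$. Hint: the final loss is a scalar.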

In [16]:

def cross_entropy_error(pred, y):

    if pred.ndim == 1:

        y = y.reshape(1, y.size)

        pred = pred.reshape(1, pred.size)

    # If the labels are one-hot vectors, convert them to class indices

    if y.size == pred.size:

        y = y.argmax(axis=1)

    batch_size = pred.shape[0]

    res = None

    # Pick the predicted probability of the correct class for each sample

    res = -np.sum(np.log(pred[np.arange(batch_size), y] + 1e-7)) / batch_size

    return res
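The indexing used to select the probability of the correct class deserves care. As a small illustration with made-up probabilities, `pred[np.arange(n), y]` picks one entry per row, whereas `pred[:, y]` returns an n × n block that mixes every sample with every label:

```python
import numpy as np

pred = np.array([[0.7, 0.2, 0.1],
                 [0.1, 0.8, 0.1],
                 [0.3, 0.3, 0.4]])
y = np.array([0, 1, 2])              # correct class of each row
n = pred.shape[0]

picked = pred[np.arange(n), y]       # one probability per sample
block = pred[:, y]                   # every row indexed by every label

print(picked.shape, block.shape)     # (3,) (3, 3)
loss = -np.sum(np.log(picked + 1e-7)) / n
print(loss)
```

Only the first form yields the mean cross-entropy over the batch.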

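Now we implement the softmax layer. Since it also includes the cross-entropy error as the loss function, it is called the "Softmax-with-Loss" layer. The figure shows the computational graph of the Softmax-with-Loss layer (softmax function plus cross-entropy error).

As you can see, the Softmax-with-Loss layer is somewhat complicated. Only the final result is given here: the gradient with respect to the softmax input is $(\hat{y} - y) / m$, where $m$ is the batch size.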

In [17]:

class SoftmaxWithLoss:

    def __init__(self):

        self.loss = None

        self.pred = None # softmax output

        self.y = None # labels

    def forward(self, x, y):

        self.y = y

        self.pred = softmax(x)

        self.loss = cross_entropy_error(self.pred, self.y)

        return self.loss

    def backward(self, dout=1):

        batch_size = self.y.shape[0]

        if self.y.size == self.pred.size: # labels are one-hot vectors

            dx = (self.pred - self.y) / batch_size

        else:

            dx = self.pred.copy()

            dx[np.arange(batch_size), self.y] -= 1

            dx = dx / batch_size

        return dx
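The backward formula `(pred - y) / batch_size` can be checked numerically. The sketch below is a standalone illustration with its own small `softmax` and `mean_cross_entropy` helpers (assumptions, not the notebook's functions); it compares the analytic gradient with central differences on random logits.

```python
import numpy as np

def softmax(x):
    shifted = x - x.max(axis=1, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=1, keepdims=True)

def mean_cross_entropy(logits, y_onehot):
    p = softmax(logits)
    return -np.sum(y_onehot * np.log(p)) / logits.shape[0]

rng = np.random.default_rng(0)
logits = rng.standard_normal((4, 3))
y_onehot = np.eye(3)[[0, 2, 1, 2]]

analytic = (softmax(logits) - y_onehot) / logits.shape[0]

# Central differences over every logit.
numeric = np.zeros_like(logits)
h = 1e-5
for idx in np.ndindex(*logits.shape):
    plus, minus = logits.copy(), logits.copy()
    plus[idx] += h
    minus[idx] -= h
    numeric[idx] = (mean_cross_entropy(plus, y_onehot)
                    - mean_cross_entropy(minus, y_onehot)) / (2 * h)

print(np.max(np.abs(analytic - numeric)))  # should be tiny
```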

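3.3 Putting the blocks together

We can now combine the layers defined above into our own three-layer neural network. Training a neural network takes roughly 5 steps:

- Initialize the weights

- Randomly select a subset of the data

- Compute the gradients

- Update the parameters

- Repeat the previous three steps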
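The steps above can be sketched on a toy problem before moving to the full network. The example below is an assumption-laden illustration: it trains a single softmax layer on synthetic two-class data, and the data, learning rate, and batch size are chosen only to keep the example short.

```python
import numpy as np

rng = np.random.default_rng(0)
# Synthetic data (an assumption for illustration): 2 features, 2 separable classes.
X = rng.standard_normal((200, 2))
labels = (X[:, 0] + X[:, 1] > 0).astype(int)
Y = np.eye(2)[labels]

# Step 1: initialize the weights.
W = 0.01 * rng.standard_normal((2, 2))
b = np.zeros(2)

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

learning_rate, batch_size = 0.5, 20
for step in range(200):                                  # Step 5: repeat steps 2 to 4.
    idx = rng.choice(len(X), batch_size)                 # Step 2: sample a mini-batch.
    xb, yb = X[idx], Y[idx]
    grad_z = (softmax(xb @ W + b) - yb) / batch_size     # Step 3: compute the gradient.
    W -= learning_rate * (xb.T @ grad_z)                 # Step 4: update the parameters.
    b -= learning_rate * grad_z.sum(axis=0)

accuracy = np.mean(np.argmax(X @ W + b, axis=1) == labels)
print(accuracy)
```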

In [18]:

class TwoLayerNet:

    # Note: despite its name, this network has three Affine layers

    def __init__(self, input_size, hidden_size, output_size, weight_init_std = 0.01):

        # Initialize the weights

        self.params = initialize_parameters(input_size, hidden_size, output_size, weight_init_std)

        # Iteration counter, used by Adam

        self.t = 0

        # Create the layers

        self.layers = OrderedDict()

        self.layers['Affine1'] = Affine(self.params['W1'], self.params['b1'])

        self.layers['Relu1'] = Relu()

        self.layers['Affine2'] = Affine(self.params['W2'], self.params['b2'])

        self.layers['Relu2'] = Relu()

        self.layers['Affine3'] = Affine(self.params['W3'], self.params['b3'])

        self.lastLayer = SoftmaxWithLoss()

    def predict(self, x):

        # Forward propagation

        pred = x.copy()

        for layer in self.layers.values():

            ### START CODE HERE ###

            pred = layer.forward(pred)

            ### END CODE HERE ###

        return pred

    def loss(self, x, y):

        # Compute the cross-entropy error

        ### START CODE HERE ###

        pred = self.predict(x)

        loss = self.lastLayer.forward(pred,y)

        ### END CODE HERE ###

        return loss

    def accuracy(self, x, y):

        # Given data x and labels y, return the network's current prediction accuracy

        accuracy = None

        pred = self.predict(x)

        pred = np.argmax(pred, axis=1)

        if y.ndim != 1:

            y = np.argmax(y, axis=1)

        accuracy = np.sum(pred == y) / float(x.shape[0])

        return accuracy

    def gradient(self, x, y):

        # Forward propagation

        self.loss(x, y)

        # Backpropagation

        dout = 1

        dout = self.lastLayer.backward(dout)

        layers = list(self.layers.values())

        layers.reverse()

        for layer in layers:

            dout = layer.backward(dout)

        # Collect the gradients

        grads = {}

        grads['W1'], grads['b1'] = self.layers['Affine1'].dW, self.layers['Affine1'].db

        grads['W2'], grads['b2'] = self.layers['Affine2'].dW, self.layers['Affine2'].db

        grads['W3'], grads['b3'] = self.layers['Affine3'].dW, self.layers['Affine3'].db

        return grads

3.4 Training

First define the parameter update function.

In [19]:

def update_parameters(network, grads, learning_rate=0.001):

    """

    Update the parameters of network with gradient descent

    """

    ### START CODE HERE ###

    for key in ('W1', 'b1', 'W2', 'b2', 'W3', 'b3'):

        # In-place update, so the Affine layers that share these arrays see the new values

        network.params[key] -= learning_rate*grads[key]

    ### END CODE HERE ###

    return

Then define the training function.

In [20]:

def train_network(network, update_params_method, iters_num, train_size, batch_size, learning_rate):

    train_loss_list = []

    train_acc_list = []

    test_acc_list = []

    iter_per_epoch = max(train_size / batch_size, 1)

    for i in range(iters_num):

        # Sample a mini-batch (with replacement) from the global training set

        batch_mask = np.random.choice(train_size, batch_size)

        x_batch = x_train[batch_mask]

        t_batch = y_train[batch_mask]

        network.t += 1

        # Compute the gradients

        grad = network.gradient(x_batch, t_batch)

        # Update the parameters

        update_params_method(network, grad, learning_rate)

        loss = network.loss(x_batch, t_batch)

        train_loss_list.append(loss)

        # Report the accuracy once per epoch

        if i % iter_per_epoch == 0:

            train_acc = network.accuracy(x_train, y_train)

            test_acc = network.accuracy(x_test, y_test)

            train_acc_list.append(train_acc)

            test_acc_list.append(test_acc)

            print("Train acc:{:<.6f}\tTest acc:{:<.6f}".format(train_acc, test_acc))

In [21]:

# Load the data

(x_train, y_train), (x_test, y_test) = load_mnist(path, normalize=True, one_hot_label=True)

In [22]:

network = TwoLayerNet(input_size=784, hidden_size=300, output_size=10)

In [23]:

iters_num = 10000

train_size = x_train.shape[0]

batch_size = 100

learning_rate = 0.1

train_network(network, update_parameters, iters_num, train_size, batch_size, learning_rate)

Train acc:0.087200 Test acc:0.086500

Train acc:0.881483 Test acc:0.887300

Train acc:0.924683 Test acc:0.922100

Train acc:0.946400 Test acc:0.946400

Train acc:0.958733 Test acc:0.954900

Train acc:0.969550 Test acc:0.964600

Train acc:0.974883 Test acc:0.968500

Train acc:0.978117 Test acc:0.971100

Train acc:0.981833 Test acc:0.972100

Train acc:0.984933 Test acc:0.975100

Train acc:0.987933 Test acc:0.978300

Train acc:0.988950 Test acc:0.977200

Train acc:0.987800 Test acc:0.975900

Train acc:0.991833 Test acc:0.978300

Train acc:0.993367 Test acc:0.978200

Train acc:0.993417 Test acc:0.978300

Train acc:0.992333 Test acc:0.978400

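3.5 Gradient descent optimization algorithms

We now review several optimization algorithms covered in class. Pay attention to how they differ and how they relate.

3.5.1 AdaGrad

Near a minimum, a fixed step size $\alpha$ makes the parameter $\theta$ oscillate around the minimum without reaching it. AdaGrad's idea is therefore to lower the learning rate $\alpha$ as the number of iterations grows. The learning rate decays as

$$\alpha^t = \frac{\alpha}{\sqrt{t+1}}$$

where $t$ denotes the $t$-th iteration.

When the gradient is small, $\theta$ moves in small steps and descends slowly in flat regions. AdaGrad uses the root mean square of the gradients to speed up $\theta$ in flat regions. The root mean square is

$$\sigma^t = \sqrt{\frac{1}{t+1} \sum_{i=0}^{t} (g^i)^2}$$

where $g^i$ denotes the past gradient values. The AdaGrad parameter update is

$$\theta^{t+1} = \theta^t - \frac{\alpha^t}{\sigma^t} g^t = \theta^t - \frac{\alpha}{\sqrt{\sum_{i=0}^{t} (g^i)^2}} g^t$$

In flat regions the root mean square is small, and dividing the gradient by a small number makes it larger, which lengthens the step of $\theta$ and speeds up descent. The drawback of AdaGrad is that the accumulated sum of squared gradients keeps growing with the number of iterations, so the update approaches 0 and training stalls early. To avoid a zero denominator, we add a small value $\epsilon$ to it.

The denominator contains the sum of squares of all past gradients, so an implementation does not need to store every past gradient. It only keeps one running sum of squared gradients per parameter, initialized to 0.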
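The shrinking step size can be seen in a scalar toy example. The sketch below assumes a constant gradient of 2.0 and a learning rate of 0.1 (both chosen only for illustration) and records the AdaGrad step at each iteration; with a constant gradient the step after $t$ iterations is roughly the learning rate divided by $\sqrt{t}$.

```python
import numpy as np

learning_rate, epsilon = 0.1, 1e-7
grad = 2.0                            # assumed constant gradient
grad_squared_sum = 0.0
steps = []
for t in range(1, 101):
    grad_squared_sum += grad ** 2     # running sum of squared gradients
    steps.append(learning_rate * grad / (np.sqrt(grad_squared_sum) + epsilon))

print(steps[0], steps[3], steps[99])  # roughly 0.1, 0.05, 0.01
```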

In [24]:

def initialize_grads_squared(network):

    """

    Initialize the running sums of squared gradients

    """

    grads_squared = {}

    for key in ('W1', 'b1', 'W2', 'b2', 'W3', 'b3'):

        grads_squared[key] = np.zeros(network.params[key].shape)

    return grads_squared

In [25]:

network = TwoLayerNet(input_size=784, hidden_size=300, output_size=10)

grads_squared = initialize_grads_squared(network)

In [26]:

def update_parameters_with_adagrad(network, grads, learning_rate=0.001, epsilon = 1e-7):

    for key in ('W1', 'b1', 'W2', 'b2', 'W3', 'b3'):

        ### START CODE HERE ###

        # Accumulate the sum of squared gradients (grads_squared is a global)

        grads_squared[key] += grads[key]**2

        network.params[key] -= learning_rate*grads[key]/(np.sqrt(grads_squared[key])+epsilon)

        ### END CODE HERE ###

    return

In [27]:

iters_num = 10000

train_size = x_train.shape[0]

batch_size = 100

learning_rate = 0.001

train_network(network, update_parameters_with_adagrad, iters_num, train_size, batch_size, learning_rate)

Train acc:0.227933 Test acc:0.223100

Train acc:0.863117 Test acc:0.864500

Train acc:0.881933 Test acc:0.882900

Train acc:0.891633 Test acc:0.891900

Train acc:0.896583 Test acc:0.896700

Train acc:0.900600 Test acc:0.900800

Train acc:0.903317 Test acc:0.903600

Train acc:0.906050 Test acc:0.906800

Train acc:0.907450 Test acc:0.908900

Train acc:0.910583 Test acc:0.909300

Train acc:0.912300 Test acc:0.913000

Train acc:0.914333 Test acc:0.913100

Train acc:0.914733 Test acc:0.914100

Train acc:0.916083 Test acc:0.914200

Train acc:0.918217 Test acc:0.916600

Train acc:0.918833 Test acc:0.917100

Train acc:0.919400 Test acc:0.919100

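3.5.2 RMSprop

The AdaGrad formula shows that the gradients from every past iteration affect the current parameter update. If early gradients pointed in a poor direction, they still influence the current update. RMSprop therefore effectively accumulates only a recent window of squared gradients rather than the whole history.

The RMSprop formulas are:

$$u^0 = 0$$

$$u^{t+1} = \rho u^t + (1 - \rho)(g^t)^2$$

$$\theta^{t+1} = \theta^t - \frac{\alpha}{\sqrt{u^{t+1}} + \epsilon} g^t$$

Here $g^t$ is the gradient at iteration $t$ and $\rho$ is a hyperparameter, usually set to 0.999 and rarely tuned. $\epsilon$ prevents a zero denominator. Note that, because these algorithms are presented together and Adam combines several of them, the hyperparameter names follow Adam: the $\rho$ in the formulas corresponds to the parameter beta in the code below. Almost all of these algorithms keep some state variables, which are initialized in essentially the same way as for AdaGrad, so the initialization code is not repeated.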
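To make the contrast with AdaGrad concrete, the sketch below feeds the same constant gradient to both accumulators for 10000 iterations. The constant gradient of 2.0 is an assumption chosen for illustration; the decay rate and learning rate follow the values used in this notebook.

```python
import numpy as np

learning_rate, epsilon, rho = 0.001, 1e-7, 0.999
grad = 2.0                            # assumed constant gradient
adagrad_sum, u = 0.0, 0.0
for t in range(10000):
    adagrad_sum += grad ** 2                   # grows without bound
    u = rho * u + (1 - rho) * grad ** 2        # levels off near grad ** 2

adagrad_step = learning_rate * grad / (np.sqrt(adagrad_sum) + epsilon)
rmsprop_step = learning_rate * grad / (np.sqrt(u) + epsilon)
print(adagrad_step)   # about learning_rate / 100 after 10000 iterations
print(rmsprop_step)   # stays close to learning_rate
```

Because old squared gradients decay away, the RMSprop step does not shrink toward zero the way the AdaGrad step does.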

In [28]:

def update_parameters_with_rmsprop(network, grads, learning_rate=0.001, epsilon = 1e-7, beta=0.999):

    for key in ('W1', 'b1', 'W2', 'b2', 'W3', 'b3'):

        ### START CODE HERE ###

        # The u in the formulas is grads_squared here

        grads_squared[key] = beta*grads_squared[key]+(1-beta)*(grads[key]**2)

        network.params[key] -= learning_rate*grads[key]/(np.sqrt(grads_squared[key])+epsilon)

        ### END CODE HERE ###

    return

In [29]:

network = TwoLayerNet(input_size=784, hidden_size=300, output_size=10)

grads_squared = initialize_grads_squared(network)

In [30]:

iters_num = 10000

train_size = x_train.shape[0]

batch_size = 100

learning_rate = 0.001

train_network(network, update_parameters_with_rmsprop, iters_num, train_size, batch_size, learning_rate)

Train acc:0.098633 Test acc:0.095800

Train acc:0.968333 Test acc:0.963300

Train acc:0.978600 Test acc:0.970200

Train acc:0.984600 Test acc:0.973200

Train acc:0.987267 Test acc:0.974800

Train acc:0.990567 Test acc:0.977800

Train acc:0.991267 Test acc:0.976000

Train acc:0.994117 Test acc:0.979300

Train acc:0.993517 Test acc:0.977300

Train acc:0.995100 Test acc:0.976800

Train acc:0.995517 Test acc:0.977700

Train acc:0.997583 Test acc:0.979800

Train acc:0.997067 Test acc:0.976900

Train acc:0.997733 Test acc:0.977600

Train acc:0.997633 Test acc:0.977400

Train acc:0.996183 Test acc:0.975100

Train acc:0.996417 Test acc:0.975900

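3.5.3 Momentum

Gradient descent with momentum computes an exponentially weighted average of the gradients and uses it to update the weights. The procedure is:

$$v^0 = 0$$

$$v^{t+1} = \rho v^t + \alpha g^t$$

$$\theta^{t+1} = \theta^t - v^{t+1}$$

Here $g^t$ is the gradient at iteration $t$, $\alpha$ is the learning rate, and $\rho$ is usually set to 0.9.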
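A scalar example helps to interpret the velocity. Under the assumption of a constant gradient, the velocity converges to the plain gradient step divided by one minus the momentum coefficient, so with a coefficient of 0.9 the long-run step is about ten times a single plain step. The values below are illustrative only:

```python
learning_rate, rho = 0.001, 0.9
grad = 1.0                            # assumed constant gradient
v = 0.0
for t in range(200):
    v = rho * v + learning_rate * grad

limit = learning_rate * grad / (1 - rho)
print(v, limit)  # both close to 0.01
```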

In [31]:

def initialize_velocity(network):

    v = {}

    for key in ('W1', 'b1', 'W2', 'b2', 'W3', 'b3'):

        v[key] = np.zeros((network.params[key]).shape)

    return v

In [32]:

network = TwoLayerNet(input_size=784, hidden_size=300, output_size=10)

v = initialize_velocity(network)

In [33]:

def update_parameters_with_momentum(network, grads, learning_rate=0.001, beta=0.9):

    for key in ('W1', 'b1', 'W2', 'b2', 'W3', 'b3'):

        ### START CODE HERE ###

        # v is the global velocity dictionary created by initialize_velocity

        v[key] = beta*v[key]+learning_rate*grads[key]

        network.params[key] -= v[key]

        ### END CODE HERE ###

    return

In [34]:

iters_num = 10000

train_size = x_train.shape[0]

batch_size = 100

learning_rate = 0.001

train_network(network, update_parameters_with_momentum, iters_num, train_size, batch_size, learning_rate)

Train acc:0.087333 Test acc:0.086700

Train acc:0.112367 Test acc:0.113500

Train acc:0.262567 Test acc:0.265500

Train acc:0.388550 Test acc:0.400500

Train acc:0.688550 Test acc:0.699800

Train acc:0.783200 Test acc:0.790000

Train acc:0.823617 Test acc:0.830000

Train acc:0.848867 Test acc:0.852900

Train acc:0.867650 Test acc:0.870500

Train acc:0.880717 Test acc:0.884000

Train acc:0.889933 Test acc:0.889700

Train acc:0.896033 Test acc:0.894500

Train acc:0.900233 Test acc:0.899000

Train acc:0.905300 Test acc:0.902500

Train acc:0.909533 Test acc:0.906300

Train acc:0.912867 Test acc:0.910400

Train acc:0.915367 Test acc:0.913600

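3.5.4 Nesterov Momentum

Nesterov Momentum differs from Momentum in that it evaluates the gradient at the point reached after applying the velocity. The algorithm is:

$$v^{t+1} = \rho v^t + \alpha \nabla J(\theta^t - \rho v^t)$$

$$\theta^{t+1} = \theta^t - v^{t+1}$$

In an implementation, however, we do not compute $\nabla J(\theta^t)$ and then $\nabla J(\theta^t - \rho v^t)$ separately. In code, the update above is equivalent to the following, where $\theta$ now stands for the look-ahead point $\theta^t - \rho v^t$:

$$v^{t+1} = \rho v^t + \alpha \nabla J(\theta^t)$$

$$\theta^{t+1} = \theta^t - \rho v^{t+1} - \alpha \nabla J(\theta^t)$$

Here $\rho$ is usually set to 0.9.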
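The equivalence of the two forms can be checked numerically. The sketch below runs both on the toy objective $J(\theta) = \frac{1}{2}\theta^2$ (an assumption chosen for illustration) and verifies that the parameter of the reformulated version always equals the look-ahead point of the original version.

```python
def grad(theta):
    # Gradient of the toy objective J(theta) = 0.5 * theta**2.
    return theta

alpha, rho = 0.1, 0.9

# Look-ahead form: the gradient is evaluated at theta - rho * v.
theta, v = 5.0, 0.0
# Reformulated form: phi tracks the look-ahead point theta - rho * v.
phi, w = theta - rho * v, 0.0

max_gap = 0.0
for _ in range(50):
    v = rho * v + alpha * grad(theta - rho * v)
    theta = theta - v

    g = grad(phi)
    w = rho * w + alpha * g
    phi = phi - rho * w - alpha * g

    max_gap = max(max_gap, abs(phi - (theta - rho * v)))

print(max_gap)  # close to 0 up to floating-point error
```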

In [35]:

def update_parameters_with_nesterov_momentum(network, grads, learning_rate=0.001, beta=0.9):

    for key in ('W1', 'b1', 'W2', 'b2', 'W3', 'b3'):

        ### START CODE HERE ###

        v[key] = beta*v[key]+learning_rate*grads[key]

        network.params[key] -= (beta*v[key]+learning_rate*grads[key])

        ### END CODE HERE ###

    return

In [36]:

network = TwoLayerNet(input_size=784, hidden_size=300, output_size=10)

v = initialize_velocity(network)

In [37]:

iters_num = 10000

train_size = x_train.shape[0]

batch_size = 100

learning_rate = 0.001

train_network(network, update_parameters_with_nesterov_momentum, iters_num, train_size, batch_size, learning_rate)

Train acc:0.087283 Test acc:0.086500

Train acc:0.112367 Test acc:0.113500

Train acc:0.264100 Test acc:0.268100

Train acc:0.393133 Test acc:0.404900

Train acc:0.689750 Test acc:0.701400

Train acc:0.783483 Test acc:0.790400

Train acc:0.823683 Test acc:0.829800

Train acc:0.849533 Test acc:0.854100

Train acc:0.867767 Test acc:0.870600

Train acc:0.880817 Test acc:0.883900

Train acc:0.889867 Test acc:0.890000

Train acc:0.896067 Test acc:0.894900

Train acc:0.900117 Test acc:0.899000

Train acc:0.905283 Test acc:0.902500

Train acc:0.909533 Test acc:0.906200

Train acc:0.913017 Test acc:0.910300

Train acc:0.915450 Test acc:0.914000

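3.5.5 Adam

Adam (Adaptive Moment Estimation) combines Momentum and RMSprop. The procedure is:

$$v^{t+1} = \beta_1 v^t + (1 - \beta_1) g^t$$

$$u^{t+1} = \beta_2 u^t + (1 - \beta_2)(g^t)^2$$

$$\theta^{t+1} = \theta^t - \frac{\alpha}{\sqrt{u^{t+1}} + \epsilon} v^{t+1}$$

where $g^t$ is the gradient at iteration $t$. These formulas show that $v$ and $u$ are very small during the first updates, because both start at 0. The early updates therefore need a bias correction. The complete formulas are:

$$v^{t+1} = \beta_1 v^t + (1 - \beta_1) g^t, \qquad \hat{v}^{t+1} = \frac{v^{t+1}}{1 - \beta_1^{t+1}}$$

$$u^{t+1} = \beta_2 u^t + (1 - \beta_2)(g^t)^2, \qquad \hat{u}^{t+1} = \frac{u^{t+1}}{1 - \beta_2^{t+1}}$$

$$\theta^{t+1} = \theta^t - \frac{\alpha}{\sqrt{\hat{u}^{t+1}} + \epsilon} \hat{v}^{t+1}$$

The usual settings are $\beta_1 = 0.9$ and $\beta_2 = 0.999$. $\epsilon$ again keeps the denominator from being too small or zero.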
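The effect of the bias correction is easiest to see on the very first update. The sketch below assumes both moment estimates start at 0 and tries three gradient magnitudes chosen for illustration; after correction, the first step is close to the learning rate regardless of the gradient's scale.

```python
import numpy as np

beta1, beta2, epsilon, learning_rate = 0.9, 0.999, 1e-7, 0.001

first_steps = {}
for grad in (0.01, 1.0, 100.0):       # assumed gradient magnitudes
    v = (1 - beta1) * grad            # first update starting from v = 0
    u = (1 - beta2) * grad ** 2       # first update starting from u = 0
    t = 1
    v_corrected = v / (1 - beta1 ** t)
    u_corrected = u / (1 - beta2 ** t)
    first_steps[grad] = learning_rate * v_corrected / (np.sqrt(u_corrected) + epsilon)

print(first_steps)  # every value is close to learning_rate
```

Without the correction, the first step would be `learning_rate * (1 - beta1) / sqrt(1 - beta2)`, about three times the learning rate for these settings.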

In [38]:

def initialize_adam(network):

    v = {}

    u = {}

    for key in ('W1', 'b1', 'W2', 'b2', 'W3', 'b3'):

        v[key] = np.zeros(np.shape(network.params[key]))

        u[key] = np.zeros(np.shape(network.params[key]))

    return v, u

In [39]:

network = TwoLayerNet(input_size=784, hidden_size=300, output_size=10)

v, u = initialize_adam(network)

In [40]:

def update_parameters_with_adam(network, grads, learning_rate=0.001, epsilon=1e-7, beta1=0.9, beta2=0.999):

    v_corrected = {}

    u_corrected = {}

    t = network.t # current iteration count (starts at 1)

    for key in ('W1', 'b1', 'W2', 'b2', 'W3', 'b3'):

        ### START CODE HERE ###

        v[key] = beta1*v[key]+(1-beta1)*grads[key]

        v_corrected[key] = v[key]/(1-beta1**t)

        u[key] = beta2*u[key]+(1-beta2)*(grads[key]**2)

        u_corrected[key] = u[key]/(1-beta2**t)

        network.params[key] -= learning_rate/(np.sqrt(u_corrected[key])+epsilon)*v_corrected[key]

        ### END CODE HERE ###

    return

In [41]:

iters_num = 10000

train_size = x_train.shape[0]

batch_size = 100

learning_rate = 0.001

train_network(network, update_parameters_with_adam, iters_num, train_size, batch_size, learning_rate)

Train acc:0.227933 Test acc:0.223100

Train acc:0.958100 Test acc:0.954900

Train acc:0.972467 Test acc:0.968100

Train acc:0.979800 Test acc:0.970700

Train acc:0.985467 Test acc:0.975100

Train acc:0.989367 Test acc:0.976000

Train acc:0.989550 Test acc:0.975300

Train acc:0.991617 Test acc:0.980000

Train acc:0.992467 Test acc:0.978400

Train acc:0.993617 Test acc:0.976200

Train acc:0.995150 Test acc:0.981300

Train acc:0.994583 Test acc:0.978900

Train acc:0.995817 Test acc:0.980100

Train acc:0.997450 Test acc:0.980500

Train acc:0.997167 Test acc:0.980600

Train acc:0.997433 Test acc:0.981000

Train acc:0.997600 Test acc:0.982100

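4. Summary

In this experiment we built a complete three-layer fully connected network and trained it on the MNIST dataset with several gradient-based optimization algorithms.

After submitting the homework, why not try to push the accuracy a little higher?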
